Geneseo rejects online proctoring. One reason? Coded bias.

17 Feb 2021

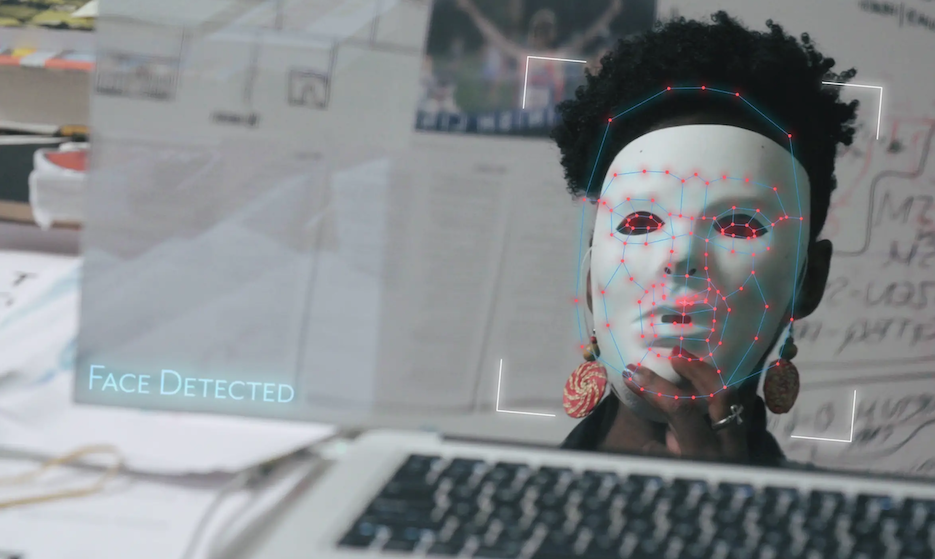

Media kit still from the film Coded Bias


The documentary Coded Bias follows MIT researcher Joy Buolamwini as she explores why facial recognition technology fails to see dark-skinned faces, how biases of various kinds find their way into computer algorithms, and what citizens can do to fight algorithmic injustice.

It’s been available this week for Geneseo campus community members to stream for free as part of the college’s line-up of events for Black History Month.

Tomorrow, our community can also meet the director of Coded Bias, Shalini Kantayya, who will join us virtually to discuss the film and answer questions. Registering for the Q&A session will get you a link to watch the film.

Together, the screening and visit provide an excellent opportunity to address Geneseo’s stance on a hotly debated topic in education right now: online exam proctoring. Although proctoring isn’t a focus of Kantayya’s film, proctoring software uses some of the same methods that the film scrutinizes, and it raises the same issues that lie at the film’s moral and political heart: issues at the intersection of technology, privacy, autonomy, equity, and social justice.

Geneseo’s decision not to pay for online proctoring services reflects a conviction that these services run afoul of the college’s values and of its recently articulated commitment to antiracism.

Proctoring and the pandemic

When the Covid-19 pandemic forced much of higher education online last spring, concern immediately erupted that students would take advantage of the remote environment to cheat. A new study published in the International Journal for Educational Integrity, covered just this month in Inside Higher Ed, lends some validity to that concern by linking the move online to a spike in activity on the website Chegg. On its “About” page, Chegg describes itself as a “student-first connected learning platform,” but as the study’s authors point out, it also provides an easy way for students to “breach academic integrity by obtaining outside help” on assignments or exams they’re expected to complete on their own.

One tool designed to prevent online exam cheating was already available when the concern arose: commercial services that use students’ own computers to monitor their activity while they work. Companies such as ProctorU, Proctorio, Examity, Honorlock, and Respondus either allow a live proctor to watch a student through the student’s camera or capture video of the student and run it through an algorithm to detect evidence of cheating.

Big Brother is watching U.

In April, an article in the Washington Post put its finger on one problem with online proctoring right in the headline: the pandemic, it declared, was “driving a new wave of student surveillance.”

Perhaps the most important word in that headline was “new.” Just a few months earlier, the Post had described how “Colleges are turning students’ phones into surveillance machines, tracking the locations of hundreds of thousands.”

The problem with using what critics routinely call “spyware” to enforce academic integrity, or even simply to nudge students toward better academic performance, goes beyond the culture of surveillance it creates and promotes; it touches equally on the question of who profits from that surveillance. As Forbes reported last spring, some University of California Santa Barbara faculty raised a red flag as early as March about “ProctorU’s data collection, retention, and sharing practices.” Spyware that monetizes student data, like all forms of what Harvard Business School professor Shoshana Zuboff calls “surveillance capitalism,” turns students into the raw material for a new kind of commodity, in the process depriving them not only of privacy but also of autonomy.

Algorithmic injustice

In December 2019, the New York Times reported on a study by the National Institute of Standards and Technology that showed most commercial facial recognition systems are biased, falsely identifying African American and Asian faces at rates 10 to 100 times greater than white ones, and exhibiting the highest error rates when identifying Native Americans. The systems in the study also had more difficulty identifying women than men and identifying older adults than middle-aged ones.

Earlier that same year, Nijeer Parks, a Black man, had been arrested on suspicion of shoplifting and trying to assault a police officer at a Hampton Inn in Woodbridge, NJ. Although miles from the location of the incident when it happened, Parks had been falsely identified as the perpetrator through the use of facial recognition technology. He became the third Black man in the U.S. known to have been incorrectly arrested based on flawed facial recognition.

The following year, the Times reported on the facial recognition company Clearview AI, branding it “The Secretive Company that Might End Privacy as We Know It” and pointing out that “without public scrutiny, more than 600 law enforcement agencies have started using Clearview in the past year.” The article noted the tendency of the company’s software to produce false matches.

At the end of 2020, a year after the Times had first reported on the Parks case, Parks sued the police, the prosecutor, and the City of Woodbridge for false arrest, false imprisonment, and violation of his civil rights.

Clearview AI’s facial recognition app had been used in his case.

Coded bias

The “AI” in Clearview’s name stands for “artificial intelligence,” the broad class of software processes and products based on the ability of computers to learn on their own rather than follow a predetermined algorithm. One way they can learn is by working repetitively through reams of data and gradually improving their ability to perform the task that’s been set for them.

This process — also known as “machine learning” — may sound like one of pure logic, freed from the flawed judgments, unexamined prejudices, and social privilege of the humans who set it in motion, but it’s not. Well before the federal government’s report on the racial bias built into facial recognition, alarms were sounding about the use of AI in a growing list of contexts, including lending, real estate, hiring, internet search, and the criminal justice system. Books such as Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy (2016), by Cathy O’Neil, and Algorithms of Oppression: How Search Engines Reinforce Racism (2018), by Safiya Noble, explained how machine learning algorithms can encode not only the bias of the coders who write them but the systemic inequities underlying the data on which the algorithms are trained.

Similar concerns were raised by an MIT graduate student, Joy Buolamwini, the protagonist of Coded Bias, who discovered that she had to put on a white mask to get a facial recognition system to see her face. She gave a TED Talk on her ensuing research that drew a large audience (views to date: 1,363,950), and she founded the Algorithmic Justice League, aimed at “building a movement to shift the AI ecosystem towards equitable and accountable AI.”

In 2018, Buolamwini and AI researcher Timnit Gebru, then at Microsoft, published “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification” in Proceedings of Machine Learning Research. Buolamwini and Gebru tested facial analysis systems developed by IBM, Microsoft, and Megvii of China against a data set of 1,270 diverse faces and found a huge disparity between the systems’ error rates when classifying the gender of lighter-skinned men and darker-skinned women, respectively.
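For readers curious about what an "intersectional" audit of this kind involves, here is a minimal sketch in Python. It is not the authors' code, and the counts are invented purely for illustration; they are not figures from the study. The function name `error_rates_by_subgroup` and the helper `make_records` are hypothetical. The point is only that a single overall error rate can look acceptable while hiding a much higher rate for one subgroup.

```python
from collections import defaultdict


def make_records(skin, gender, n_correct, n_wrong):
    """Build toy audit records: (skin type, gender, classifier was correct)."""
    return [(skin, gender, True)] * n_correct + [(skin, gender, False)] * n_wrong


def error_rates_by_subgroup(records):
    """Return the fraction of misclassified faces for each (skin, gender) pair."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for skin, gender, correct in records:
        key = (skin, gender)
        totals[key] += 1
        if not correct:
            errors[key] += 1
    return {key: errors[key] / totals[key] for key in totals}


# Hypothetical counts, invented for illustration only (NOT data from the study).
records = (
    make_records("lighter", "male", 19, 1)
    + make_records("lighter", "female", 18, 2)
    + make_records("darker", "male", 17, 3)
    + make_records("darker", "female", 13, 7)
)

# The aggregate error rate averages over everyone and masks the disparity.
overall = sum(1 for _, _, correct in records if not correct) / len(records)
rates = error_rates_by_subgroup(records)

print(f"overall error rate: {overall:.1%}")
for (skin, gender), rate in sorted(rates.items()):
    print(f"{skin:8s} {gender:7s} error rate: {rate:.1%}")
```

Breaking the results out by skin type and gender together, rather than by either one alone, is what makes the worst-served group visible.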

A question of integrity

Because proctoring software may rely on facial recognition, its potential harms include not only loss of privacy and autonomy but also discrimination.

Just this month, in fact, concern over the disparate impact of facial recognition technology on women and people of color led the Lawyers’ Committee for Civil Rights Under Law to threaten suit against the State Bar of California unless it stops using the technology to help prevent cheating by students taking the bar exam online.

As six U.S. senators point out in a letter to the CEO of the company providing monitoring for the bar exam, students from a variety of institutions, across a range of testing situations, have reported unsettling instances of technology-based discrimination, including being unrecognizable to the software because of religious dress (such as a headscarf) and being flagged as “suspicious” because of a disability or other physical condition.

The legal challenges to online proctoring are sure to grow, and as they do, colleges and universities that have subscribed to proctoring services may well change their minds about the tradeoff between the integrity of their values and the value of these services in securing the academic integrity of their students.

But no institution of higher learning that promotes itself as student-centered, equity-minded, community-driven, pledged to antiracism, committed to justice for the marginalized and underrepresented, and organized to produce independent-thinking, autonomous graduates should require a legal challenge in order to reject a flawed and biased surveillance technology as its guarantor of honesty and fairness.

Not that honesty and fairness don’t matter; these, too, are important educational values. And not that cheating isn’t a genuine problem. But there are ways to foster honesty and obtain fairness that not only avoid sacrificing other values but are also, arguably, more conducive to learning.

These other approaches seek to give students less reason for cheating to begin with. I’ll write about them in a future post. They’re preferable by far to the surveillance approach that Geneseo has wisely refused.